Nothing makes me happier as an evaluator than clients who use and share their evaluation to improve their work and the work of others. It's the direct opposite of sending your report off to trustees or funders and forgetting about it. So I was delighted to find out Leeds Dance Partnership (LDP) had done exactly that. I recently completed their impact and process evaluation for the first four official years of the partnership, a three-quarters-of-a-million-pound initiative supported by Arts Council England's (ACE) Ambition for Excellence scheme.

It was a very complicated partnership, programme and evaluation. Partners had to be honest, not only identifying successes but also looking at where things had not gone to plan. We included it all. Participants, the local and national dance sector, Leeds cultural decision makers and regional freelance artists all contributed, ensuring a balanced and practical set of perspectives. There was a lot to say about the achievements, pitfalls and learning along the way. At the same time, we wanted the report to be accessible, so that different readers could easily find what they needed.

As soon as the report was completed, LDP sent it off to ACE ahead of a follow-up meeting. I rarely expect funders to read evaluation reports, knowing how stretched everyone's workload is. So I was delighted to hear ACE had not only read the report but also fed back their appreciation that "the report was more thorough than we expected - very good, and we welcomed the SWOT which explored the flaws as well as stating the positives." Those investing in your work really do want to see the learning process, not just the good news stories (of course they want to see those too!).

I thought that was the end of the story, but no. I was even more pleased when I received a message out of the blue via LinkedIn from an Organisational Development Consultant now working with Leeds Dance Partnership, who said the report had been shared with her and, "I found this such a helpful and insightful piece of work that I wanted to write to say thank you as it has enabled me to engage with LDP faster and in a more informed way than would otherwise have been the case."

LDP has also made the summary and full reports available to anyone via their website here, or you can read them on screen / download them directly from my own collection here. A variety of other examples of my evaluation reports are also available on the Example Reports page.

So - these are just a couple of examples of what the point of evaluation is. It's a way to reflect, learn and evolve. It's a way to pass the memory of what happened, what worked and what didn't on from one set of people to another, to save time, stop reinventing wheels, and make the most of the resources you have. There are other reasons to do evaluation, do it well, and put it to good use. But making it publicly available and actively sharing it are a couple that really make me feel the work has been worthwhile.

One of the most common questions I'm asked is: can you help us with our evaluation?

My response to that is always: very probably. What exactly do you want or need, and what parameters are you thinking of regarding timeframes and budget? Often, people don't exactly know. They know evaluation is a good thing, or at the very least that they should be doing / getting some. But sometimes that's all they know. So here are some things to consider when you want to commission some evaluation (or put it out for tender).

Firstly - if the evaluation is because an external funder expects / requires it, please do be prepared to let an evaluator see your application. They will treat it confidentially, but it is a very quick way for them to give you guidance on exactly what will work best for you. A good specialist won't be pushing a big sale, but they can help you decide which options are going to be best.

Secondly - no matter how much you decide to outsource or not, an evaluator cannot do everything for you. You need a good, consistent, honest working relationship to get the best results possible. The more you put in and own it, the better the relationship and the results will be. Ideally it works as a partnership.

All of this shows how I try to work with organisations wanting evaluation. This is what you can expect from me. You can also just say: "We have £x. We'd like X. Could you do that for us?"

A few years back, I worked on a three-year contract supporting organisational change in a group of universities that were starting to come to terms with a then brand new agenda, where academics and researchers needed to become more outward-facing, connecting with the public on their doorstep and at large. Part of my role was to mentor internal directors and project managers, and the departments they worked with, in looking for the impact of their activities.

Like many major programmes, the initiative had a quite intense, technical, formal, robust evaluation system underpinning it. As in many organisations, this was not the fun part of anyone's work and, on top of everything else going on, was not generally what most people were interested in prioritising. In my mentoring role, I wanted to increase people's confidence about being able to carry out evaluation that was realistic and meaningful, and reduce their fear of becoming overwhelmed.

At the same time, some of the community groups involved had been saying their previous experiences of evaluation in university programmes had, at times, been overwhelming, invasive, and one-sided.

As a result, I created a simple, practical set of suggestions to make evaluation do-able, useful, positive and meaningful. It simply offered these ten top tips and, five years later, with the huge amount I've since learned about evaluation, they still absolutely stand the test of time...

Image courtesy of Manchester Libraries, Information and Archives, Manchester City Council

I've just recently started work on the evaluation of a year-long programme hosted by Manchester Metropolitan University's Institute of Humanities and Social Science Research. Entitled Creating Our Future Histories, the scheme sees 'early career researchers' (usually those who are completing a PhD, or are just about to start one / have recently finished one) working with Manchester community organisations. Each partnership is mentored by a more experienced academic.

The partnerships are punctuated along the way by a series of weekend workshops combining into a professional development course on how community engagement between academics / researchers / communities might take shape. Each partnership is also expected to meet at least once between each workshop.

The partner-groups are developing co-constructed plans and activities which research previously uncharted areas of the organisation's heritage, and look towards incorporating their future in a way which will become part of their heritage in years to come - there's the 'Future Histories' part. Late next Spring each group will showcase their findings in creative and public ways - many yet to be decided, though ideas are already circulating about film, video, exhibitions, time capsules and more.

I'm about a month in and I'm once again struck by the many rich and hidden histories of Manchester - industry, architecture, battle and radical action, and many other things which show the inventiveness and resilience of this sometimes bloody-minded and often ingenious city.

You can find out more about the project here, and I particularly recommend the research group pages and project blogs to find out more about the organisations involved and the progress and reflections taking place.

I'm just wrapping up some research for a museum. They asked me to collate case studies of good and innovative practice in how comparable venues (which in this case include medium-large scale museums and galleries) use digital technology to support school visits, in workshops, self-directed studies and potentially back in school.

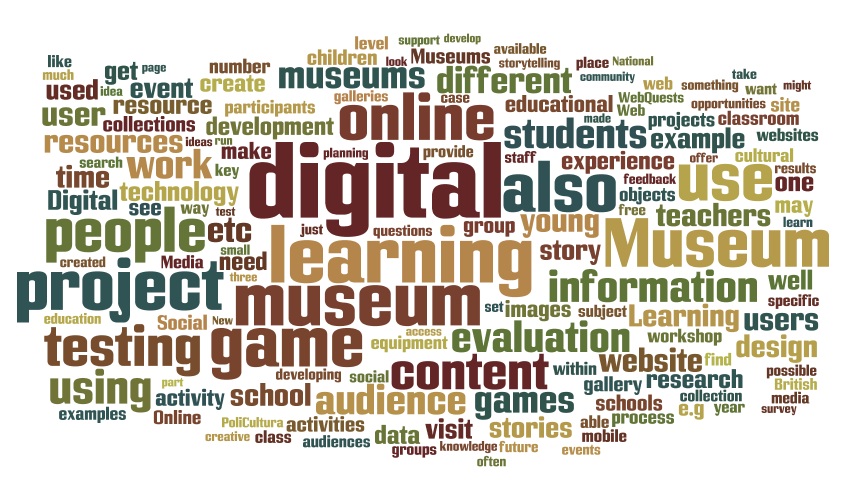

They also wanted to find out about the ways such activity can be evaluated. They absolutely do not want to have form after form handed to teachers and students, and wondered how else really good evaluation might take place.

The brief contains phrases like blended learning and e-learning. It's problematic because there are no clear definitions of what those are and where they start and end, and it's a real rabbit hole - an entire and massive area of specialisation and expertise. It's a small piece of work, just skimming the surface to help the museum think in new and different ways about what they might do, and how to monitor its impact well.

I've collated 64 pages, over 32,000 words, of case studies of applications, programmes, projects, reviews and industry expert opinions on contextual issues such as evaluation, future proofing and general good practice in digital learning and engagement. I've visited more websites, read more conference papers and searched more forums than I can count, and interviewed some really insightful and inspiring colleagues in the field.

Eventually, if the museum in question has no objections, I'll upload the collated set of case studies and expertise here for anyone else who might like it. It will be in a very rough and ready format - just my research notes really, in no particular order. But it may be of some help to someone, so watch this space...

In the meantime, it seemed much easier to put all 32k+ words into wordle and see what happened. There it is above; that's what the whole shebang amounts to. Interesting at this stage that 'online' is so prominent, given that I wasn't specifically looking at just online options. Interesting too that if 'conversation', 'collaboration' or 'participation' are in there, they certainly don't jump out.

I spent a huge amount of last year working with some amazing academic staff, researchers and community groups as they learned more about one another through creative projects as part of the Manchester Beacon for Public Engagement. My role was (and still is) to help what's happening at practice level link with a rather complex overarching evaluation framework.

The Manchester Beacon programme is, in a nutshell, about encouraging learning institutions to better understand how to open themselves up to communities more effectively. An important part of that process is to trial new approaches and reflect on what works, or what could be improved. It's an action learning model really.

The Beacon team and I identified that those involved in the practice needed support in being able to report back on their work in ways that fit the evaluation framework. So we set about producing some guidance for them, based on the input of community groups and a pilot cohort of academics and researchers.

Fast forward many months and the evaluation guidance pack / toolkit I created with their help, and the help of other colleagues, is now freely available. It contains some basic principles of evaluation, hints and tips, templates, and examples of creative consultation. You can read or download it below; contact me for a copy; or read / download it here. On this page, you can also find some very short podcasts and top tips from some of the staff and community groups who have used the document.

At the end of the pack there are also lots more links to further evaluation guidance in public engagement, and also support created specifically for the fields of science communication; community engagement; arts and heritage; and health and wellbeing. All thoughts or comments welcome...

I'm now part of the network exploring the SROI (Social Return on Investment) model. It's a way of putting monetary values on the sorts of evaluation and participation outcomes that occur in the projects I work with, and demonstrating what difference the investment has really made - what happened that couldn't or wouldn't have happened without it.

It's also a way for me to offer organisations the sort of robustness they might expect from an academic research team. A lot of it will be new to me but I'm hoping it will add a layer of technical formality to the likes of MLA's Generic Social Outcomes. Indeed MLA have already commissioned large scale pilot evaluation projects testing SROI's capacity for analysing the values of specific library and museum schemes.

Exploring qualitative outcomes, impacts and benefits is something I really enjoy. There are times though, when stakeholders need more quantifiable results and this should enable me to provide that too. SROI has been developed, and continues to be developed by its members, to support arts and culture, public services, science and education - all of which are fields I work with. It also supports employment and business, environment and climate, and health and care. So I hope this means it will become a tool that is recognised across all organisations.

I should add though, that for me this will be an additional way of identifying what works and where things can be improved. It won't be the only one. I don't think any single tool can really capture everything that's important about a project. It's important to create a variety of tools bespoke to each separate project to cross-reference information and help pick out trends, similarities and patterns, but also individual stories - the illustrations of people who have really felt something change as a result of taking part. But this does offer a way to create evidence of quantifiable outcomes alongside the qualitative benefits I'm always keen to advocate based on evidence.

Interestingly, DEMOS have just released a report suggesting that the approaches underpinning SROI are sound, though a range of shared outcome measurements across the public sector, which can be gathered more simply than SROI is able to, is desperately needed in order to help smaller organisations demonstrate their worth. I can't argue with that kind of logic, but until it arrives, SROI seems to be the closest there is to different sectors speaking the same language. It's the start of a new learning journey for me, and a welcome addition to what I will be able to offer the organisations I work with.

In evaluation, looking at outcomes is vital. When you've invested in a project of course you'll need to know what was achieved and where there are still gaps. But it can be cumbersome reading mounds and mounds of text in a report. Charts, graphs and percentages can help clarify, but can still make for dull reading (and for some feel too much like maths homework).

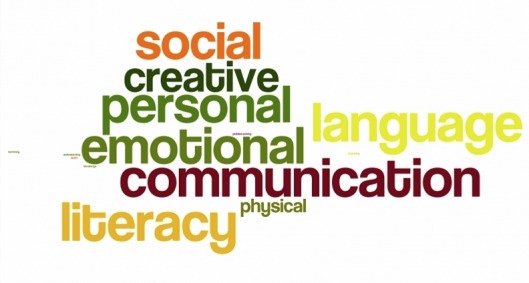

To help busy partners in any project, finding a variety of ways to report on activity can make all the difference between people remembering what went well and being able to advocate the value of their work... or not.

I've recently been creating a toolkit for early years practitioners looking at how creative engagement can help achieve new and unexpected results. Its aim is to be quickly digestible and highly practical. The toolkit is based on the activity of ten creative early years projects in schools and Children's Centres in the North of England. The projects were mapped against the Early Years Foundation Stage six areas of learning.

To illustrate which of the EYFS outcomes the projects really brought to life, I used wordle to create this at-a-glance illustration. The larger the word, the more presence it had in the projects. You can see here that Personal, Social and Emotional development was the strongest feature across the programme overall.

It doesn't replace the need for writing other information in the report of course. However the teachers, children's centre staff and creative practitioners involved, and readers of the toolkit, can now instantly see where the projects thrived and what kinds of outcomes similar work might expect to achieve, so much more quickly and easily than deciphering a big chunk of writing or trying to analyse a graph.

For several years there has been debate about the potential for using the arts to help improve literacy and numeracy (and other subjects). For many arts organisations, being able to find ways to achieve this has been a necessity to survive. This causes discomfort for some, who feel it's not what the arts are for and that it risks people losing sight of other benefits that are perhaps more intrinsic to artistic practice.

Personally I don't choose one side or the other of the argument; there are truths and benefits (and no doubt pitfalls) either way. Though I do know this - for children and young people who for whatever reason are not as developed as their peers in language and numeracy skills, the arts can present a more accessible way to unpick learning than some other formats. I've seen it happen first hand. I can't say for sure it's specific to the arts - I do think it's something about a creative approach generally and the opportunity to work in different environments and include kinaesthetic activity, all of which is common, but not exclusive, to arts activity.

However - last year I was asked to work with the inspirational arts producer Elizabeth Lynch to evaluate Performing for Success, an arts-based project building on the proven achievements of Playing for Success (which used sports to improve young people's numeracy and literacy skills). It was a unique programme in that it met Extended School agendas and relied on partnerships between extended school deliverers experienced in sport, and arts or cultural organisations. However there was no national model; each pilot area approached the structure in different ways, some more effectively and successfully than others.

It was a DCSF-funded initiative, delivered not via the 'usual' channels (such as Find Your Talent or Creative Partnerships) but through an independent education contractor, Rex Hall Associates. In the current climate, who can say what will happen to these kinds of initiatives? However, if you'd like to read our response to the programme, you can download it *here*.

UPDATE: I have learned that Rex Hall sadly passed away on May 31st. My experience of working with him was brief, but it was so inspiring to see first hand the difference one person can make. My thoughts and wishes go to all of those close to him.

Blog

I'm most interested in how the public, your public, whoever that may be, engages with culture and creativity.

And if it nurtures creativity and develops personal, social or professional skills, I'm absolutely all ears.

Categories

All

Archives

May 2023

RSS Feed
